Unlock Language Insights

Explore practical applications, tools, and techniques for AI language model development and deployment.

Language Model Insights

Explore how language models work and how they are applied in practice, from cloud servers to edge devices.

Model Evaluation

Assess performance, run benchmarks, and validate safety fixes for language models in real-world scenarios.

Deployment Strategies

Learn about cloud and edge deployment techniques for efficient model utilization and monitoring.

Optimize On-Device Workloads

Apply effective quantization and resource-management techniques in on-device applications.

Language Models

Explore the core technologies behind language models, from tokenization to attention.

Tokenization Insights

Understanding tokenization in language models.

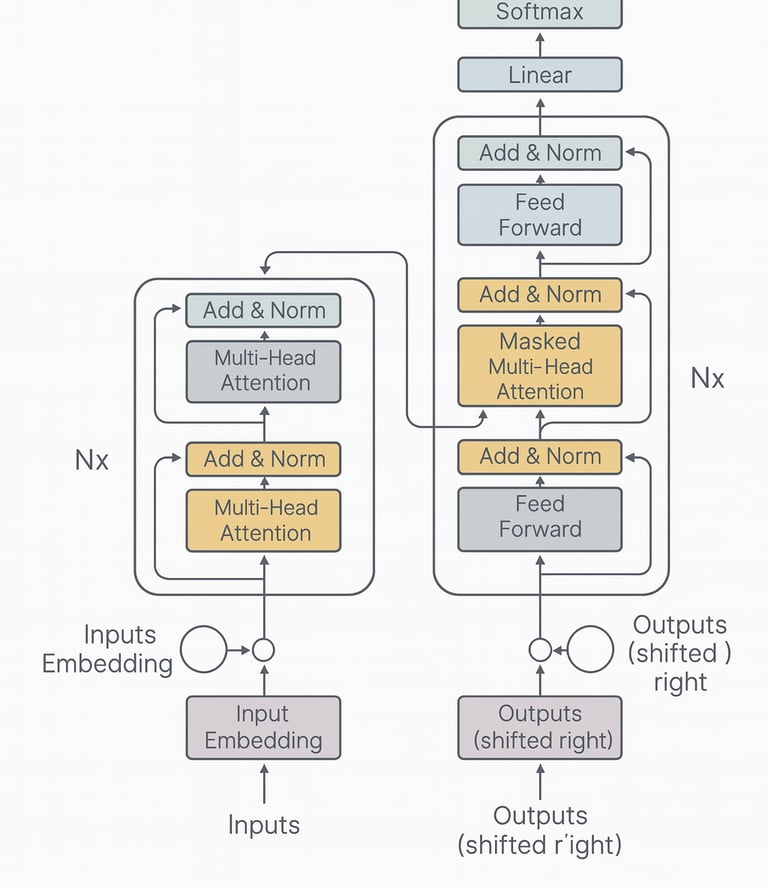

Attention Mechanisms

Dive into attention mechanisms and their applications.

LLMs & SLMs

What to use where: small language models (SLMs) for on-device, privacy-sensitive tasks; large language models (LLMs) for complex, retrieval-heavy workflows. We publish benchmarks, cost models, and deployment patterns that teams can reproduce.

FAQ

What are language models?

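Language models are statistical models, usually neural networks, that learn how likely sequences of tokens are from large text corpora and use those probabilities to predict and generate text. Modern models rely on techniques such as tokenization and attention.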
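To make "how likely sequences of tokens are" concrete, here is a minimal sketch of a bigram language model. It is a counting model rather than a neural network, and the toy corpus, the `<s>`/`</s>` boundary markers, and the function names (`train_bigram`, `sequence_probability`, `generate_greedy`) are illustrative assumptions. Smoothing is omitted, so any word pair never seen in training gets probability zero.

```python
from collections import Counter, defaultdict

START, END = "<s>", "</s>"  # hypothetical sentence-boundary markers


def train_bigram(corpus: list[list[str]]) -> dict[str, dict[str, float]]:
    """Estimate P(next token | previous token) from bigram counts."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = [START] + sentence + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    # Normalize each row of counts into a probability distribution.
    model = {}
    for prev, row in counts.items():
        total = sum(row.values())
        model[prev] = {tok: n / total for tok, n in row.items()}
    return model


def sequence_probability(model, sentence: list[str]) -> float:
    """Multiply conditional probabilities along the sentence (0.0 for unseen pairs)."""
    tokens = [START] + sentence + [END]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= model.get(prev, {}).get(nxt, 0.0)
    return prob


def generate_greedy(model, max_tokens: int = 10) -> list[str]:
    """Repeatedly pick the most probable next token until END or the length cap."""
    output, prev = [], START
    for _ in range(max_tokens):
        nxt = max(model[prev], key=model[prev].get)  # ties go to the first-seen token
        if nxt == END:
            break
        output.append(nxt)
        prev = nxt
    return output


corpus = [
    ["the", "model", "predicts", "text"],
    ["the", "model", "generates", "text"],
    ["the", "cat", "sleeps"],
]
model = train_bigram(corpus)
print(sequence_probability(model, ["the", "model", "predicts", "text"]))  # 1 * 2/3 * 1/2 * 1 * 1
print(generate_greedy(model))
```

Neural language models replace the count table with a learned function of the whole preceding context, but they are used the same way: score candidate next tokens, then pick or sample one.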

How does tokenization work?

Tokenization splits text into smaller units called tokens (words, subwords, or characters) and maps each token to an integer ID from a fixed vocabulary, giving the model a numeric sequence it can process.
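The split-then-map idea can be illustrated with greedy longest-match segmentation over a tiny, hypothetical vocabulary (`VOCAB`, `tokenize`, and `encode` are illustrative names). Production tokenizers, such as byte-pair encoding (BPE), learn vocabularies of tens of thousands of pieces from data and apply their own segmentation rules, so treat this as a teaching aid rather than how any specific model tokenizes.

```python
# Hypothetical toy vocabulary; real tokenizers learn far larger vocabularies from data.
VOCAB = {"<unk>": 0, "token": 1, "ization": 2, "break": 3, "s": 4, "text": 5, " ": 6}


def tokenize(text: str, vocab: dict[str, int]) -> list[str]:
    """Split text into the longest vocabulary pieces, scanning left to right."""
    max_len = max(len(piece) for piece in vocab)
    pieces, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocabulary match is found.
        for end in range(min(len(text), i + max_len), i, -1):
            if text[i:end] in vocab:
                pieces.append(text[i:end])
                i = end
                break
        else:
            # No piece matched: emit the unknown token and skip one character.
            pieces.append("<unk>")
            i += 1
    return pieces


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Map each token to its integer ID, the form a model actually consumes."""
    return [vocab[piece] for piece in tokenize(text, vocab)]


print(tokenize("tokenization breaks text", VOCAB))
print(encode("tokenization breaks text", VOCAB))
```

Subword pieces like `token` + `ization` let a modest vocabulary cover words it never stored whole, while `<unk>` catches anything the vocabulary cannot represent.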

What is fine-tuning?

Fine-tuning continues training a pre-trained model on a smaller, task-specific dataset, adapting its weights to improve performance on a particular application.
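Real fine-tuning updates millions or billions of weights, but the mechanics can be shown with a toy analogy: a one-feature linear model is first "pre-trained" on general data, then trained further on a small task-specific dataset starting from those weights. The functions `sgd` and `mse`, both datasets, and the learning rates and epoch counts are arbitrary illustrative choices, not recommendations for language models.

```python
def sgd(w: float, b: float, data, lr: float, epochs: int) -> tuple[float, float]:
    """Plain per-example SGD on squared error for the model y = w * x + b."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            # Gradients of err**2 with respect to w and b.
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b


def mse(w: float, b: float, data) -> float:
    """Mean squared error of the linear model on a dataset."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


xs = [i / 10 for i in range(-10, 11)]
general_data = [(x, 2.0 * x) for x in xs]     # stand-in for broad pre-training data
task_data = [(x, 2.0 * x + 0.5) for x in xs]  # stand-in for a specific downstream task

# "Pre-train" from scratch on the general data.
w, b = sgd(0.0, 0.0, general_data, lr=0.1, epochs=200)
print("task MSE before fine-tuning:", mse(w, b, task_data))

# Fine-tune: start from the pre-trained weights with a smaller learning rate and fewer epochs.
w_ft, b_ft = sgd(w, b, task_data, lr=0.05, epochs=20)
print("task MSE after fine-tuning:", mse(w_ft, b_ft, task_data))
```

The pre-trained slope is already right for the task, so fine-tuning only has to correct the offset; the same intuition, reusing what was learned and adjusting the rest, is why fine-tuning needs far less data than training from scratch.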

What are quantization methods?

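Quantization stores a model's weights (and sometimes its activations) at lower numerical precision, for example as 8-bit integers instead of 32-bit floats. This shrinks the memory footprint and often speeds up inference, usually at a small cost in accuracy, which makes models practical for on-device workloads.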
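One common scheme is symmetric 8-bit integer quantization: pick a scale so that the largest absolute weight maps to 127, then round every weight to the nearest integer level. Stored as int8, each weight takes 1 byte instead of the 4 bytes of a float32. The sketch below applies this to a single tensor in plain Python; `quantize_int8` and `dequantize_int8` are hypothetical names, and real toolkits add refinements such as per-channel scales, calibration data, and packed integer storage.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer levels."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
approx = dequantize_int8(q, scale)
print(q)  # integer levels, one byte each when stored as int8
print(max(abs(a - w) for a, w in zip(approx, weights)))  # rounding error, at most scale / 2
```

The accuracy cost comes from that rounding error: every weight moves by at most half a quantization step, and a single large outlier weight inflates the scale for the whole tensor, which is one reason per-channel scales are popular.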

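How do I monitor performance?

Monitoring performance means tracking metrics such as latency (including tail percentiles like p95), memory usage, and cost per request, so that regressions are caught early and models stay within their performance and budget targets.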
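As an illustration, the sketch below rolls a batch of logged requests into latency percentiles, peak memory, and cost, and flags when p95 latency exceeds a budget. The `RequestRecord` schema, the per-1,000-token prices, and the 300 ms p95 budget are made-up placeholders; in practice these values come from your serving stack's metrics and your provider's pricing.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One logged inference request (hypothetical schema)."""
    latency_ms: float
    peak_memory_mb: float
    input_tokens: int
    output_tokens: int


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, with p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records, price_in_per_1k: float, price_out_per_1k: float, p95_budget_ms: float) -> dict:
    """Aggregate latency, memory, and cost, and flag a p95 latency budget breach."""
    latencies = [r.latency_ms for r in records]
    cost = sum(
        r.input_tokens / 1000 * price_in_per_1k + r.output_tokens / 1000 * price_out_per_1k
        for r in records
    )
    p95 = percentile(latencies, 95)
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": p95,
        "peak_memory_mb": max(r.peak_memory_mb for r in records),
        "total_cost": cost,
        "latency_alert": p95 > p95_budget_ms,
    }


records = [
    RequestRecord(100, 900, 500, 200),
    RequestRecord(120, 950, 800, 150),
    RequestRecord(130, 910, 400, 100),
    RequestRecord(400, 1200, 2000, 600),
]
print(summarize(records, price_in_per_1k=0.001, price_out_per_1k=0.002, p95_budget_ms=300))
```

Tracking p95 rather than only the average matters because a few slow requests, like the 400 ms one here, can breach a latency budget while the median still looks healthy.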

What is responsible use?

Responsible use refers to ethical practices for deploying language models, such as protecting user privacy and minimizing bias in model outputs.